Conceptualizing the Processing Model for the GCP Dataflow Service

"softddl.org"

4-12-2020, 11:53

Janani Ravi | Duration: 3h 52m | Video: H264 1280x720 | Audio: AAC 48 kHz 2ch | 496 MB | Language: English + .srt

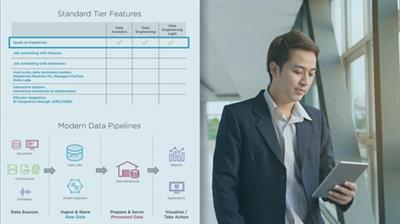

Dataflow represents a fundamentally different approach to Big Data processing than computing engines such as Spark. Dataflow is serverless and fully managed, and it runs pipelines written with the Apache Beam APIs.

Dataflow lets developers process and transform data using simple, intuitive APIs. Because it is built on the Apache Beam model, Dataflow unifies batch and stream processing of data. In this course, Conceptualizing the Processing Model for the GCP Dataflow Service, you will explore the full potential of Cloud Dataflow and its innovative programming model.
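
As a rough illustration of what unifying batch and stream processing means, the following plain-Python sketch applies the same fixed-window sum to a bounded list and to a generator standing in for an unbounded source. It does not use Dataflow or the Apache Beam SDK, and the names `fixed_window_sums` and `sensor_stream` are hypothetical. It also assumes events arrive in timestamp order; Beam itself relies on watermarks and triggers to handle late, out-of-order data.

```python
from typing import Iterable, Iterator, Optional, Tuple

Event = Tuple[int, int]  # (timestamp_seconds, value)


def fixed_window_sums(events: Iterable[Event], size: int) -> Iterator[Tuple[int, int]]:
    """Yield (window_start, sum) for fixed, non-overlapping windows of `size` seconds.

    Assumption: events arrive in non-decreasing timestamp order, so a window can be
    emitted as soon as an event for a later window appears (a crude stand-in for
    the watermark-driven triggering Beam uses).
    """
    current_start: Optional[int] = None
    total = 0
    for ts, value in events:
        start = ts - ts % size  # start of the window this event falls into
        if current_start is not None and start != current_start:
            yield current_start, total  # previous window is complete
            total = 0
        current_start = start
        total += value
    if current_start is not None:
        yield current_start, total  # flush the last window at end of input


# Batch: a bounded, in-memory collection.
batch = [(0, 1), (3, 2), (5, 4), (9, 1), (12, 3)]
print(list(fixed_window_sums(batch, size=5)))


# Stream: a source modeled as a generator; results are produced
# incrementally as each window closes, using the same function.
def sensor_stream() -> Iterator[Event]:
    for ts in range(0, 15, 2):
        yield ts, 1


for window in fixed_window_sums(sensor_stream(), size=5):
    print(window)
```

The point of the design is that the windowing logic is written once and does not care whether its input is finite or arrives over time.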

First, you will work with an example Apache Beam pipeline that performs stream processing operations and see how it can be executed using the Cloud Dataflow runner. Next, you will learn about the basic optimizations that Dataflow applies to your execution graph, such as fusion and combine optimizations. Finally, you will explore Dataflow pipelines without writing any code at all by using built-in templates, and you will see how to create a custom template to execute your own processing jobs. When you are finished with this course, you will have the skills and knowledge to design Dataflow pipelines using the Apache Beam SDKs, integrate these pipelines with other Google services, and run them on the Google Cloud Platform.
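
The fusion and combine optimizations can be illustrated with a plain-Python sketch. It does not use Dataflow or the Beam SDK, and the helper names (`fuse`, `count_without_lifting`, `count_with_lifting`) are hypothetical. Fusion is modeled as composing element-wise steps into one function so each element passes through all of them in a single pass. The combine optimization (often called combiner lifting) is modeled as computing partial counts on each worker shard before the shuffle, which shrinks the number of records that must be grouped by key.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Tuple


# Fusion (sketch): compose element-wise steps so each element flows through
# all of them in one pass, with no intermediate collection between steps.
def fuse(*steps: Callable[[str], str]) -> Callable[[str], str]:
    def fused(element: str) -> str:
        for step in steps:
            element = step(element)
        return element
    return fused


# Without combiner lifting: every (word, 1) record goes through the shuffle.
def count_without_lifting(shards: List[List[str]]) -> Tuple[Dict[str, int], int]:
    grouped: Dict[str, List[int]] = defaultdict(list)
    shuffled = 0
    for shard in shards:
        for word in shard:
            grouped[word].append(1)
            shuffled += 1
    return {word: sum(ones) for word, ones in grouped.items()}, shuffled


# With combiner lifting: each shard (worker) pre-aggregates locally, so only
# one partial count per distinct word per shard goes through the shuffle.
def count_with_lifting(shards: List[List[str]]) -> Tuple[Dict[str, int], int]:
    grouped: Dict[str, List[int]] = defaultdict(list)
    shuffled = 0
    for shard in shards:
        for word, partial in Counter(shard).items():
            grouped[word].append(partial)
            shuffled += 1
    return {word: sum(parts) for word, parts in grouped.items()}, shuffled


normalize = fuse(str.strip, str.lower)  # two steps fused into one
raw_shards = [[" Beam", "beam ", "Dataflow"], ["BEAM", "dataflow", "Dataflow "]]
shards = [[normalize(word) for word in shard] for shard in raw_shards]

counts_a, shuffled_a = count_without_lifting(shards)
counts_b, shuffled_b = count_with_lifting(shards)
print(counts_a, shuffled_a)
print(counts_b, shuffled_b)
```

With these sample shards both strategies produce the same counts, but the lifted version sends 4 records through the simulated shuffle instead of 6; the savings grow with the number of repeated keys per worker.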
