Udemy - Azure Data Engineering - Build Ingestion Framework

"softddl.org"

19-08-2021, 07:14


Created by David Charles Academy | Last updated 7/2021

Duration: 5h20m | 2 sections | 43 lectures | Video: 1280x720 | Audio: 44 kHz | 1.99 GB

Genre: eLearning | Language: English + Sub


Learn Azure data engineering by building an industry-standard metadata-driven ingestion framework

What you'll learn

Azure Data Factory

Azure Blob Storage

Azure Data Lake Storage Gen2

Azure Data Factory Pipelines

Data Engineering Concepts

Data Lake Concepts

Metadata-Driven Framework Concepts

Industry Example of How to Build Ingestion Frameworks

Dynamic Azure Data Factory Pipelines

Email Notifications with Logic Apps

Tracking of Pipelines and Batch Runs

Version Management with Azure DevOps


Requirements

Basic PC / Laptop

Description

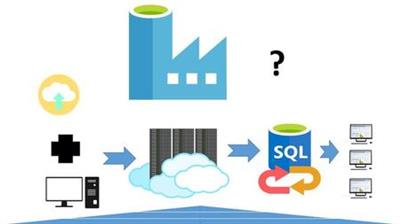

The main objective of this course is to help you learn the data engineering techniques for building metadata-driven frameworks with Azure data engineering tools such as Data Factory, Azure SQL, and others.


Building frameworks is now an industry norm, and knowing how to visualize, design, plan, and implement data frameworks has become an important skill.


The framework that we are going to build together is referred to as the Metadata-Driven Ingestion Framework.


Ingesting data from disparate source systems into the data lake is a key requirement for any company that aspires to be data-driven, and a common way to ingest that data is both desirable and necessary.


A metadata-driven framework allows a company to develop the system once and then have various business clusters adopt and reuse it without additional development, saving the business time and costs. Think of it as a plug-and-play system.
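The following minimal Python sketch makes the plug-and-play idea concrete. It assumes the metadata is a plain list of records rather than the Azure SQL tables the course builds. The names `SourceConfig`, `build_sink_path`, and `run_ingestion` are hypothetical, as are the field names and the `<container>/<system>/<date>/<file>` sink layout. The `copy` callable stands in for whatever actually moves the data; in Azure Data Factory that would be a Copy activity.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SourceConfig:
    # Hypothetical metadata row; the course stores this kind of row in Azure SQL.
    source_system: str
    source_path: str
    sink_container: str
    enabled: bool = True


def build_sink_path(cfg: SourceConfig, run_date: str) -> str:
    # Hypothetical naming convention: <container>/<system>/<run date>/<file name>
    file_name = cfg.source_path.rsplit("/", 1)[-1]
    return f"{cfg.sink_container}/{cfg.source_system}/{run_date}/{file_name}"


def run_ingestion(metadata: list[SourceConfig], run_date: str,
                  copy: Callable[[str, str], None]) -> list[str]:
    """One generic routine: every source is driven by its metadata row."""
    ingested = []
    for cfg in metadata:
        if not cfg.enabled:  # sources can be switched off without code changes
            continue
        sink = build_sink_path(cfg, run_date)
        copy(cfg.source_path, sink)  # stand-in for the real copy step
        ingested.append(sink)
    return ingested


# Usage: three metadata rows, one of them disabled; copies are only recorded.
metadata = [
    SourceConfig("crm", "exports/customers.csv", "raw"),
    SourceConfig("erp", "exports/orders.csv", "raw"),
    SourceConfig("legacy", "dump/items.csv", "raw", enabled=False),
]
copies: list[tuple[str, str]] = []
result = run_ingestion(metadata, "2021-08-19", lambda src, dst: copies.append((src, dst)))
print(result)  # ['raw/crm/2021-08-19/customers.csv', 'raw/erp/2021-08-19/orders.csv']
```

In this sketch, onboarding another source means appending one more `SourceConfig` row. `run_ingestion` itself does not change. That is the point of keeping the logic generic and the specifics in metadata.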


The first objective of the course is to onboard you onto the Azure Data Factory platform and help you assemble your first Azure Data Factory pipeline. Once you have a good grip on the Azure Data Factory development pattern, it becomes easier to apply the same pattern to onboard other data sources and sinks.


Once you are comfortable building a basic Azure Data Factory pipeline, the second objective is to build a fully fledged, working metadata-driven framework that makes ingestion more dynamic. We will also build the framework so that you can audit every batch orchestration and every individual pipeline run for business intelligence and operational monitoring.
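The batch and pipeline-run auditing described above can be sketched as two log tables: one row per batch, one row per pipeline run, linked by a batch ID. This is a minimal illustration that uses an in-memory SQLite database as a stand-in for the Azure SQL metadata database and T-SQL stored procedures the course uses. The following are all hypothetical choices:

* the table names and columns;
* the status values;
* the helper functions;
* the rule that a batch fails if any of its pipeline runs fails.

```python
import sqlite3
import uuid
from datetime import datetime, timezone

# Assumption: in-memory SQLite stands in for the framework's Azure SQL database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE batch_run (
    batch_id TEXT PRIMARY KEY, started_at TEXT, ended_at TEXT, status TEXT);
CREATE TABLE pipeline_run (
    run_id TEXT PRIMARY KEY, batch_id TEXT REFERENCES batch_run(batch_id),
    pipeline_name TEXT, status TEXT, rows_copied INTEGER, logged_at TEXT);
""")


def now() -> str:
    return datetime.now(timezone.utc).isoformat()


def start_batch() -> str:
    # Open a batch record before any pipeline in the orchestration runs.
    batch_id = str(uuid.uuid4())
    conn.execute("INSERT INTO batch_run VALUES (?, ?, NULL, 'Running')",
                 (batch_id, now()))
    return batch_id


def log_pipeline_run(batch_id: str, pipeline_name: str,
                     status: str, rows_copied: int) -> None:
    # One audit row per individual pipeline run, tied to its batch.
    conn.execute("INSERT INTO pipeline_run VALUES (?, ?, ?, ?, ?, ?)",
                 (str(uuid.uuid4()), batch_id, pipeline_name, status,
                  rows_copied, now()))


def end_batch(batch_id: str) -> str:
    # Hypothetical rule: the batch fails if any of its pipeline runs did not succeed.
    (failures,) = conn.execute(
        "SELECT COUNT(*) FROM pipeline_run WHERE batch_id = ? AND status <> 'Succeeded'",
        (batch_id,)).fetchone()
    status = "Failed" if failures else "Succeeded"
    conn.execute("UPDATE batch_run SET ended_at = ?, status = ? WHERE batch_id = ?",
                 (now(), status, batch_id))
    conn.commit()
    return status


batch = start_batch()
log_pipeline_run(batch, "ingest_crm_customers", "Succeeded", 1200)
log_pipeline_run(batch, "ingest_erp_orders", "Failed", 0)
print(end_batch(batch))  # Failed
```

Every pipeline row carries its `batch_id`, so a single join can answer both kinds of question. An operational one: which run failed in last night's batch? A BI one: how many rows did each source deliver over time?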


Creating Your First Pipeline


What will be covered is as follows:

1. Introduction to Azure Data Factory

2. Unpack the requirements and technical architecture

3. Create an Azure Data Factory Resource

4. Create an Azure Blob Storage account

5. Create an Azure Data Lake Storage Gen2 account

6. Learn how to use the Storage Explorer

7. Create your first Azure Data Factory pipeline


Metadata-Driven Ingestion

1. Unpack the theory of metadata-driven ingestion

2. Describing the High-Level Plan for building the User

3. Creation of a dedicated Active Directory User and assigning appropriate permissions

4. Using Azure Data Studio

5. Creation of the metadata-driven database (tables and T-SQL stored procedures)

6. Applying business naming conventions

7. Creating an email notifications strategy

8. Creation of reusable utility pipelines

9. Develop a mechanism to log data for every data ingestion pipeline run and also the batch itself

10. Creation of a dynamic data ingestion pipeline

11. Apply the orchestration pipeline

12. Explanation of T-SQL Stored Procedures for the Ingestion Engine

13. Creating an Azure DevOps Repository for the Data Factory Pipelines


Who this course is for:

Aspiring data engineers

Developers who are curious about Azure Data Factory as an ETL alternative
